整理桌面

This commit is contained in:

94

node_modules/neataptic/mkdocs/templates/articles/agario.md

generated

vendored

Normal file

94

node_modules/neataptic/mkdocs/templates/articles/agario.md

generated

vendored

Normal file

@@ -0,0 +1,94 @@

|

||||

description: How to evolve neural networks to play Agar.io with Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: neuro-evolution, agar.io, Neataptic, AI

|

||||

|

||||

Agar.io is quite a simple game to play... well, for humans it is. However is it just as simple for artificial agents? In this article I will tell you how I have constructed a genetic algorithm that evolves neural networks to play in an Agario.io-like environment. The following simulation shows agents that resulted from 1000+ generations of running the algorithm:

|

||||

|

||||

<div id="field" height="500px"></div>

|

||||

|

||||

_Hover your mouse over a blob to see some more info! Source code [here](https://github.com/wagenaartje/agario-ai)_

|

||||

|

||||

As you might have noticed, the genomes are performing quite well, but far from perfect. The genomes shows human-like traits: searching food, avoiding bigger blobs and chasing smaller blobs. However sometimes one genome just runs into a bigger blob for no reason at all. That is because each genome **can only see 3 other blobs and 3 food blobs**. But above all, the settings of the GA are far from optimized. That is why I invite you to optimize the settings, and perform a pull request on this repo.

|

||||

|

||||

### The making of

|

||||

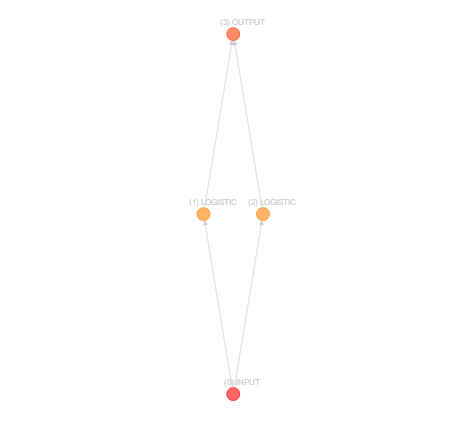

The code consists of 3 main parts: the field, the player and the genetic algorithm. In the following few paragraphs i'll go into depth on this topics, discussing my choices made. At the bottom of this article you will find a list of improvements I have thought of, but not made yet.

|

||||

|

||||

If you have any questions about the code in the linked repo, please create an issue on [this](https://github.com/wagenaartje/neataptic) repo.

|

||||

|

||||

#### The field

|

||||

The field consists of 2 different objects: food and players. Food is stationary, and has no 'brain'. Every piece of food has a static feeding value. Once food has been eaten, it just moves to a new location on the field. Players on the other hand are capable of making decisions through neural networks. They slowly decay in size when not replenished (either by eating other players or food).

|

||||

|

||||

The field has no borders; when a blob hits the left wall, it will 'teleport' to the right wall. During tests with a bordered field, the entire population of genomes tended to stick to one of the walls without ever evolving to a more flexible population. However, having borderless walls comes with a problem of which a fix has not yet been implemented: genomes that are for example near the left wall, won't detect blobs that are close to the right wall - even though the distance between the blobs can be very small.

|

||||

|

||||

**Some (configurable) settings**:

|

||||

|

||||

* There is one food blob per ~2500 pixels

|

||||

* There is one player per ~12500 pixels

|

||||

|

||||

#### The player

|

||||

The player is a simplified version of the player in the real game. A genome can't split and shoot - it can only move. The output of each genomes brain consists of merely a movement direction and movement speed.

|

||||

|

||||

Genomes can't accelerate, they immediately adapt to the speed given by their brain. They can only eat other blobs when they are 10% bigger, and they can move freely through other blobs that are less than 10% bigger. Each genome will only see the 3 closest players and the 3 closest food blobs within a certain radius.

|

||||

|

||||

**Some (configurable) settings**:

|

||||

|

||||

* A player must be 10% bigger than a blob to eat it

|

||||

* The minimal area of a player is 400 pixels

|

||||

* The maximal area of a player is 10000 pixels

|

||||

* The detection radius is 150 pixels

|

||||

* A player can see up to 3 other players in its detection radius

|

||||

* A player can see up to 3 food blobs in its detection radius

|

||||

* The maximum speed of a player is 3px/frame

|

||||

* The minimal speed of a player is 0.6px/frame

|

||||

* Every frame, the player loses 0.2% of its mass

|

||||

|

||||

#### The genetic algorithm

|

||||

The genetic algorithm is the core of the AI. In the first frame, a certain amount of players are initialized with a neural network as brain. The brains represent the population of a generation. These brains are then evolved by putting the entire population in a single playing field and letting them compete against each other. The fittest brains are moved on the next generation, the less fit brains have a high chance of being removed.

|

||||

|

||||

```javascript

|

||||

neat.sort();

|

||||

var newPopulation = [];

|

||||

|

||||

// Elitism

|

||||

for(var i = 0; i < neat.elitism; i++){

|

||||

newPopulation.push(neat.population[i]);

|

||||

}

|

||||

|

||||

// Breed the next individuals

|

||||

for(var i = 0; i < neat.popsize - neat.elitism; i++){

|

||||

newPopulation.push(neat.getOffspring());

|

||||

}

|

||||

|

||||

// Replace the old population with the new population

|

||||

neat.population = newPopulation;

|

||||

neat.mutate();

|

||||

|

||||

neat.generation++;

|

||||

startEvaluation();

|

||||

```

|

||||

|

||||

The above code shows the code run when the evaluation is finished. It is very similar to the built-in evolve() function of Neataptic, however adapted to avoid a fitness function as all genomes must be evaluated at the same time.

|

||||

|

||||

The scoring of the genomes is quite easy: when a certain amount of iterations has been reached, each genome is ranked by their area. Better performing genomes have eaten more blobs, and thus have a bigger area. This scoring is identical to the scoring in Agar.io. I have experimented with other scoring systems, but lots of them stimulated small players to finish themselves off if their score was too low for a certain amount of time.

|

||||

|

||||

**Some (configurable) settings**:

|

||||

|

||||

* An evaluation runs for 1000 frames

|

||||

* The mutation rate is 0.3

|

||||

* The elitism is 10%

|

||||

* Each genome starts with 0 hidden nodes

|

||||

* All mutation methods are allowed

|

||||

|

||||

### Issues/future improvements

|

||||

There are a couple of known issues. However, most of them linked are linked to a future improvement in some way or another.

|

||||

|

||||

**Issues**:

|

||||

|

||||

* Genomes tend to avoid hidden nodes (this is really bad)

|

||||

|

||||

**Future improvements**:

|

||||

|

||||

* Players must be able to detect close players, even if they are on the other side of the field

|

||||

* Players/food should not be spawned at locations occupied by players

|

||||

* The genetic algorithm should be able to run without any visualization

|

||||

* [.. tell me your idea!](https://github.com/wagenaartje/neataptic)

|

||||

97

node_modules/neataptic/mkdocs/templates/articles/classifycolors.md

generated

vendored

Normal file

97

node_modules/neataptic/mkdocs/templates/articles/classifycolors.md

generated

vendored

Normal file

@@ -0,0 +1,97 @@

|

||||

description: Classify different colors through genetic algorithms

|

||||

authors: Thomas Wagenaar

|

||||

keywords: color classification, genetic-algorithm, NEAT, Neataptic

|

||||

|

||||

Classifying is something a neural network can do quite well. In this article

|

||||

I will demonstrate how you can set up the evolution process of a neural network

|

||||

that learns to classify colors with Neataptic.

|

||||

|

||||

Colors:

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="red" checked="true">Red</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="orange">Orange</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="yellow">Yellow</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="green" checked="true">Green</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="blue" checked="true">Blue</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="purple">Purple</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="pink">Pink</label>

|

||||

<label class="checkbox-inline"><input class="colors" type="checkbox" value="monochrome">Monochrome</label>

|

||||

|

||||

<a href="#" class="start" style="text-decoration: none"><span class="glyphicon glyphicon-play"></span> Start evolution</a>

|

||||

|

||||

<pre class="stats">Iteration: <span class="iteration">0</span> Best-fitness: <span class="bestfitness">0</span></pre>

|

||||

<div class="row" style="margin-top: -15px;">

|

||||

<div class="col-md-6">

|

||||

<center><h2 class="blocktext">Set sorted by color</h3></center>

|

||||

<div class="row set" style="padding: 30px; margin-top: -40px; padding-right: 40px;">

|

||||

</div>

|

||||

</div>

|

||||

<div class="col-md-6">

|

||||

<center><h2 class="blocktext">Set sorted by NN</h3></center>

|

||||

<div class="row fittestset" style="padding-left: 40px;">

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

<hr>

|

||||

|

||||

### How it works

|

||||

The algorithm to this classification is actually _pretty_ easy. One of my biggest

|

||||

problem was generating the colors, however I stumbled upon [this](https://github.com/davidmerfield/randomColor)

|

||||

Javascript library that allows you to generate colors randomly by name - exactly

|

||||

what I needed (but it also created a problem, read below). So I used it to create

|

||||

a training set:

|

||||

|

||||

```javascript

|

||||

function createSet(){

|

||||

var set = [];

|

||||

|

||||

for(index in COLORS){

|

||||

var color = COLORS[index];

|

||||

|

||||

var randomColors = randomColor({ hue : color, count: PER_COLOR, format: 'rgb'});

|

||||

|

||||

for(var random in randomColors){

|

||||

var rgb = randomColors[random];

|

||||

random = rgb.substring(4, rgb.length-1).replace(/ /g, '').split(',');

|

||||

for(var y in random) random[y] = random[y]/255;

|

||||

|

||||

var output = Array.apply(null, Array(COLORS.length)).map(Number.prototype.valueOf, 0);

|

||||

output[index] = 1;

|

||||

|

||||

set.push({ input: random, output: output, color: color, rgb: rgb});

|

||||

}

|

||||

}

|

||||

|

||||

return set;

|

||||

}

|

||||

```

|

||||

|

||||

_COLORS_ is an array storing all color names in strings. The possible colors are

|

||||

listed above. Next, we convert this rgb string to an array and normalize the

|

||||

values between 0 and 1. Last of all, we normalize the colors using

|

||||

[one-hot encoding](https://www.quora.com/What-is-one-hot-encoding-and-when-is-it-used-in-data-science).

|

||||

Please note that the `color`and `rgb` object attributes are irrelevant for the algorithm.

|

||||

|

||||

```javascript

|

||||

network.evolve(set, {

|

||||

iterations: 1,

|

||||

mutationRate: 0.6,

|

||||

elisitm: 5,

|

||||

popSize: 100,

|

||||

mutation: methods.mutation.FFW,

|

||||

cost: methods.cost.MSE

|

||||

});

|

||||

```

|

||||

|

||||

Now we create the built-in genetic algorithm in neataptic.js. We define

|

||||

that we want to use all possible mutation methods and set the mutation rate

|

||||

higher than normal. Sprinkle in some elitism and double the default population

|

||||

size. Experiment with the parameters yourself, maybe you'll find even better parameters!

|

||||

|

||||

The fitness function is the most vital part of the algorithm. It basically

|

||||

calculates the [Mean Squared Error](https://en.wikipedia.org/wiki/Mean_squared_error)

|

||||

of the entire set. Neataptic saves the programming of this fitness calculation.

|

||||

At the same time the default `growth` parameter is used, so the networks will

|

||||

get penalized for being too large.

|

||||

|

||||

And putting together all this code will create a color classifier.

|

||||

10

node_modules/neataptic/mkdocs/templates/articles/index.md

generated

vendored

Normal file

10

node_modules/neataptic/mkdocs/templates/articles/index.md

generated

vendored

Normal file

@@ -0,0 +1,10 @@

|

||||

description: Articles featuring projects that were created with Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: Neataptic, JavaScript, library

|

||||

|

||||

Welcome to the articles page! Every now and then, articles will be posted here

|

||||

showing for what kind of projects Neataptic _could_ be used. Neataptic is

|

||||

excellent for the development of AI for browser games for example.

|

||||

|

||||

If you want to post your own article here, feel free to create a pull request

|

||||

or an isse on the [repo page](https://github.com/wagenaartje/neataptic)!

|

||||

70

node_modules/neataptic/mkdocs/templates/articles/neuroevolution.md

generated

vendored

Normal file

70

node_modules/neataptic/mkdocs/templates/articles/neuroevolution.md

generated

vendored

Normal file

@@ -0,0 +1,70 @@

|

||||

description: A list of neuro-evolution algorithms set up with Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: genetic-algorithm, Neat, JavaScript, Neataptic, neuro-evolution

|

||||

|

||||

This page shows some neuro-evolution examples. Please note that not every example

|

||||

may always be successful. More may be added in the future!

|

||||

|

||||

<div class="panel panel-warning autocollapse">

|

||||

<div class="panel-heading clickable">

|

||||

1: Uphill and downhill

|

||||

</div>

|

||||

<div class="panel-body">

|

||||

<p class="small">This neural network gets taught to increase the input by 0.2 until 1.0 is reached, then it must decrease the input by 2.0.</p>

|

||||

<button type="button" class="btn btn-default" onclick="showModal(1, 0)">Training set</button>

|

||||

<button type="button" class="btn btn-default" onclick="showModal(1, 1)">Evolve settings</button>

|

||||

<div class="btn-group">

|

||||

<button type="button" class="btn btn-primary" onclick="run(1)">Start</button>

|

||||

<button type="button" class="btn btn-default status1" style="display: none" onclick="showModal(1, 2)">Status</button>

|

||||

<button type="button" class="btn btn-danger error1" style="display: none">Error</button>

|

||||

</div>

|

||||

<svg class="example1" style="display: none"/>

|

||||

</div>

|

||||

</div>

|

||||

<div class="panel panel-warning autocollapse">

|

||||

<div class="panel-heading clickable">

|

||||

2: Count to ten

|

||||

</div>

|

||||

<div class="panel-body">

|

||||

<p class="small">This neural network gets taught to wait 9 inputs of 0, to output 1 at input number 10.</p>

|

||||

<button type="button" class="btn btn-default" onclick="showModal(2, 0)">Training set</button>

|

||||

<button type="button" class="btn btn-default" onclick="showModal(2, 1)">Evolve settings</button>

|

||||

<div class="btn-group">

|

||||

<button type="button" class="btn btn-primary" onclick="run(2)">Start</button>

|

||||

<button type="button" class="btn btn-default status2" style="display: none" onclick="showModal(2, 2)">Status</button>

|

||||

<button type="button" class="btn btn-danger error2" style="display: none">Error</button>

|

||||

</div>

|

||||

<svg class="example2" style="display: none"/>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

<div class="panel panel-warning autocollapse">

|

||||

<div class="panel-heading clickable">

|

||||

3: Vowel vs. consonants classification

|

||||

</div>

|

||||

<div class="panel-body">

|

||||

<p class="small">This neural network gets taught to classify if a letter of the alphabet is a vowel or not. The data is one-hot-encoded.</p>

|

||||

<button type="button" class="btn btn-default" onclick="showModal(3, 0)">Training set</button>

|

||||

<button type="button" class="btn btn-default" onclick="showModal(3, 1)">Evolve settings</button>

|

||||

<div class="btn-group">

|

||||

<button type="button" class="btn btn-primary" onclick="run(3)">Start</button>

|

||||

<button type="button" class="btn btn-default status3" style="display: none" onclick="showModal(3, 2)">Status</button>

|

||||

<button type="button" class="btn btn-danger error3" style="display: none">Error</button>

|

||||

</div>

|

||||

<svg class="example3" style="display: none"/>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

<div class="modal fade" id="modal" role="dialog">

|

||||

<div class="modal-dialog">

|

||||

<div class="modal-content">

|

||||

<div class="modal-header">

|

||||

<button type="button" class="close" data-dismiss="modal">×</button>

|

||||

<h4 class="modal-title"></h4>

|

||||

</div>

|

||||

<div class="modal-body">

|

||||

<pre class="modalcontent"></pre>

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

</div>

|

||||

82

node_modules/neataptic/mkdocs/templates/articles/playground.md

generated

vendored

Normal file

82

node_modules/neataptic/mkdocs/templates/articles/playground.md

generated

vendored

Normal file

@@ -0,0 +1,82 @@

|

||||

description: Play around with neural-networks built with Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: mutate, neural-network, machine-learning, playground, Neataptic

|

||||

|

||||

<div class="col-md-4">

|

||||

<div class="btn-group btn-group-justified">

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.SUB_NODE)">‌<span class="glyphicon glyphicon-minus"></button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default">Node</button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.ADD_NODE)">‌<span class="glyphicon glyphicon-plus"></button>

|

||||

</div>

|

||||

</div>

|

||||

<div class="btn-group btn-group-justified">

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.SUB_CONN)">‌<span class="glyphicon glyphicon-minus"></button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default">Conn</button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.ADD_CONN)">‌<span class="glyphicon glyphicon-plus"></button>

|

||||

</div>

|

||||

</div>

|

||||

<div class="btn-group btn-group-justified">

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.SUB_GATE)">‌<span class="glyphicon glyphicon-minus"></button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default">Gate</button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.ADD_GATE)">‌<span class="glyphicon glyphicon-plus"></button>

|

||||

</div>

|

||||

</div>

|

||||

<div class="btn-group btn-group-justified">

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.SUB_SELF_CONN)">‌<span class="glyphicon glyphicon-minus"></button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default">Self-conn</button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.ADD_SELF_CONN)">‌<span class="glyphicon glyphicon-plus"></button>

|

||||

</div>

|

||||

</div>

|

||||

<div class="btn-group btn-group-justified">

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.SUB_BACK_CONN)">‌<span class="glyphicon glyphicon-minus"></button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default">Back-conn</button>

|

||||

</div>

|

||||

<div class="btn-group" role="group">

|

||||

<button type="button" class="btn btn-default" onclick="mutate(methods.mutation.ADD_BACK_CONN)">‌<span class="glyphicon glyphicon-plus"></button>

|

||||

</div>

|

||||

</div>

|

||||

<div class="input-group" style="margin-bottom: 15px;">

|

||||

<span class="input-group-addon">input1</span>

|

||||

<input type="number" class="form-control input1" value=0>

|

||||

</div>

|

||||

<div class="input-group" style="margin-bottom: 15px;">

|

||||

<span class="input-group-addon">input2</span>

|

||||

<input type="number" class="form-control input2" value=1>

|

||||

</div>

|

||||

<div class="btn-group btn-group-justified">

|

||||

<div class="btn-group" role="group">

|

||||

<button class="btn btn-warning" onclick="activate()">Activate</button>

|

||||

</div>

|

||||

</div>

|

||||

<pre>Output: <span class="output"></span></pre>

|

||||

|

||||

</div>

|

||||

<div class="col-md-8">

|

||||

<div class="panel panel-default">

|

||||

<svg class="draw" width="100%" height="60%"/>

|

||||

</div>

|

||||

|

||||

</div>

|

||||

135

node_modules/neataptic/mkdocs/templates/articles/targetseeking.md

generated

vendored

Normal file

135

node_modules/neataptic/mkdocs/templates/articles/targetseeking.md

generated

vendored

Normal file

@@ -0,0 +1,135 @@

|

||||

description: Neural agents learn to seek targets through neuro-evolution

|

||||

authors: Thomas Wagenaar

|

||||

keywords: target seeking, AI, genetic-algorithm, NEAT, Neataptic

|

||||

|

||||

In the simulation below, neural networks that have been evolved through roughly

|

||||

100 generations try to seek a target. Their goal is to stay as close to the target

|

||||

as possible at all times. If you want to see how one of these networks looks like,

|

||||

check out the [complete simulation](https://wagenaartje.github.io/target-seeking-ai/).

|

||||

|

||||

<div id="field" height="500px">

|

||||

</div>

|

||||

|

||||

_Click on the field to relocate the target! Source code [here](https://wagenaartje.github.io/target-seeking-ai/)._

|

||||

|

||||

The neural agents are actually performing really well. At least one agent will have

|

||||

'solved the problem' after roughly 20 generations. That is because the base of the solution

|

||||

is quite easy: one of the inputs of the neural networks is the angle to the target, so all it

|

||||

has to do is output some value that is similar to this input value. This can easily be done

|

||||

through the identity activation function, but surprisingly, most agents in the simulation above

|

||||

tend to avoid this function.

|

||||

|

||||

You can check out the topology of the networks [here](https://wagenaartje.github.io/target-seeking-ai/).

|

||||

If you manage to evolve the genomes quicker or better than this simulation with different settings, please

|

||||

perform a pull request on [this](https://wagenaartje.github.io/target-seeking-ai/) repo.

|

||||

|

||||

### The making of

|

||||

|

||||

In the previous article I have gone more into depth on the environment of the algorithm, but in this article

|

||||

I will focus more on the settings and inputs/outputs of the algorithm itself.

|

||||

|

||||

|

||||

If you have any questions about the code in the linked repo, please create an issue on [this](https://github.com/wagenaartje/neataptic) repo.

|

||||

|

||||

|

||||

### The agents

|

||||

The agents' task is very simple. They have to get in the vicinity of the target which is set to about

|

||||

100 pixels, once they are in that vicinity, each agents' score will be increased proportionally `(100 - dist)``

|

||||

to the distance. There is one extra though: for every node in the agents' network, the score of the agent will

|

||||

be decreased. This has two reasons; 1. networks shouldn't overfit the solution and 2. having smaller networks

|

||||

reduces computation power.

|

||||

|

||||

Agents have some kind of momentum. They don't have mass, but they do have acceleration, so it takes a small

|

||||

amount of time for a agent to reach the top speed in a certain direction.

|

||||

|

||||

|

||||

**Each agent has the following inputs**:

|

||||

|

||||

* Its own speed in the x-axis

|

||||

* Its own speed in the y-axis

|

||||

* The targets' speed in the x-axis

|

||||

* The targets' speed in the y-axis

|

||||

* The angle towards the target

|

||||

* The distance to the target

|

||||

|

||||

|

||||

The output of each agent is just the desired movement direction.

|

||||

|

||||

There is no kind of collision, except for the walls of the fields. In the future, it might be interesting to

|

||||

add collisions between multiple agents and/or the target to reveal some new tactics. This would require the

|

||||

agent to know the location of surrounding agents.

|

||||

|

||||

### The target

|

||||

The target is fairly easy. It's programmed to switch direction every now and then by a random amount. There

|

||||

is one important thing however: _the target moves with half the speed of the agents_, this makes sure

|

||||

that agents always have the ability to catch up with the target. Apart from that, the physics for the target

|

||||

are similar to the agents' physics.

|

||||

|

||||

### The genetic algorithm

|

||||

|

||||

The genetic algorithm is the core of the AI. In the first frame, a certain

|

||||

amount of players are initialized with a neural network as brain. The brains

|

||||

represent the population of a generation. These brains are then evolved by

|

||||

putting the entire population in óne playing field and letting them compete

|

||||

against each other. The fittest brains are moved on the next generation,

|

||||

the less fit brains have a high chance of being removed.

|

||||

|

||||

```javascript

|

||||

// Networks shouldn't get too big

|

||||

for(var genome in neat.population){

|

||||

genome = neat.population[genome];

|

||||

genome.score -= genome.nodes.length * SCORE_RADIUS / 10;

|

||||

}

|

||||

|

||||

// Sort the population by score

|

||||

neat.sort();

|

||||

|

||||

// Draw the best genome

|

||||

drawGraph(neat.population[0].graph($('.best').width(), $('.best').height()), '.best', false);

|

||||

|

||||

// Init new pop

|

||||

var newPopulation = [];

|

||||

|

||||

// Elitism

|

||||

for(var i = 0; i < neat.elitism; i++){

|

||||

newPopulation.push(neat.population[i]);

|

||||

}

|

||||

|

||||

// Breed the next individuals

|

||||

for(var i = 0; i < neat.popsize - neat.elitism; i++){

|

||||

newPopulation.push(neat.getOffspring());

|

||||

}

|

||||

|

||||

// Replace the old population with the new population

|

||||

neat.population = newPopulation;

|

||||

neat.mutate();

|

||||

|

||||

neat.generation++;

|

||||

startEvaluation();

|

||||

```

|

||||

|

||||

The above code shows the code run when the evaluation is finished. It is very similar

|

||||

to the built-in `evolve()` function of Neataptic, however adapted to avoid a fitness

|

||||

function as all genomes must be evaluated at the same time.

|

||||

|

||||

The scoring of the genomes is quite easy: when a certain amount of iterations has been reached,

|

||||

each genome is ranked by their final score. Genomes with a higher score have a small amount of nodes

|

||||

and have been close to the target throughout the iteration.

|

||||

|

||||

**Some (configurable) settings**:

|

||||

|

||||

* An evaluation runs for 250 frames

|

||||

* The mutation rate is 0.3

|

||||

* The elitism is 10%

|

||||

* Each genome starts with 0 hidden nodes

|

||||

* All mutation methods are allowed

|

||||

|

||||

### Issues/future improvements

|

||||

* ... none yet! [Tell me your ideas!](https://github.com/wagenaartje/neataptic)

|

||||

|

||||

**Forks**

|

||||

|

||||

* [corpr8's fork](https://corpr8.github.io/neataptic-targetseeking-tron/)

|

||||

gives each neural agent its own acceleration, as well as letting each arrow

|

||||

remain in the same place after each generation. This creates a much more

|

||||

'fluid' process.

|

||||

230

node_modules/neataptic/mkdocs/templates/docs/NEAT.md

generated

vendored

Normal file

230

node_modules/neataptic/mkdocs/templates/docs/NEAT.md

generated

vendored

Normal file

@@ -0,0 +1,230 @@

|

||||

description: Documentation of the Neuro-evolution of Augmenting Topologies technique in Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: neuro-evolution, NEAT, genetic=algorithm

|

||||

|

||||

The built-in NEAT class allows you create evolutionary algorithms with just a few lines of code. If you want to evolve neural networks to conform a given dataset, check out [this](https://github.com/wagenaartje/neataptic/wiki/Network#functions) page. The following code is from the [Agario-AI](https://github.com/wagenaartje/agario-ai) built with Neataptic.

|

||||

|

||||

```javascript

|

||||

/** Construct the genetic algorithm */

|

||||

function initNeat(){

|

||||

neat = new Neat(

|

||||

1 + PLAYER_DETECTION * 3 + FOOD_DETECTION * 2,

|

||||

2,

|

||||

null,

|

||||

{

|

||||

mutation: methods.mutation.ALL

|

||||

popsize: PLAYER_AMOUNT,

|

||||

mutationRate: MUTATION_RATE,

|

||||

elitism: Math.round(ELITISM_PERCENT * PLAYER_AMOUNT),

|

||||

network: new architect.Random(

|

||||

1 + PLAYER_DETECTION * 3 + FOOD_DETECTION * 2,

|

||||

START_HIDDEN_SIZE,

|

||||

2

|

||||

)

|

||||

}

|

||||

);

|

||||

|

||||

if(USE_TRAINED_POP) neat.population = population;

|

||||

}

|

||||

|

||||

/** Start the evaluation of the current generation */

|

||||

function startEvaluation(){

|

||||

players = [];

|

||||

highestScore = 0;

|

||||

|

||||

for(var genome in neat.population){

|

||||

genome = neat.population[genome];

|

||||

new Player(genome);

|

||||

}

|

||||

}

|

||||

|

||||

/** End the evaluation of the current generation */

|

||||

function endEvaluation(){

|

||||

console.log('Generation:', neat.generation, '- average score:', neat.getAverage());

|

||||

|

||||

neat.sort();

|

||||

var newPopulation = [];

|

||||

|

||||

// Elitism

|

||||

for(var i = 0; i < neat.elitism; i++){

|

||||

newPopulation.push(neat.population[i]);

|

||||

}

|

||||

|

||||

// Breed the next individuals

|

||||

for(var i = 0; i < neat.popsize - neat.elitism; i++){

|

||||

newPopulation.push(neat.getOffspring());

|

||||

}

|

||||

|

||||

// Replace the old population with the new population

|

||||

neat.population = newPopulation;

|

||||

neat.mutate();

|

||||

|

||||

neat.generation++;

|

||||

startEvaluation();

|

||||

}

|

||||

```

|

||||

|

||||

You might also want to check out the [target-seeking project](https://github.com/wagenaartje/target-seeking-ai) built with Neataptic.

|

||||

|

||||

## Options

|

||||

The constructor comes with various options. The constructor works as follows:

|

||||

|

||||

```javascript

|

||||

new Neat(input, output, fitnessFunction, options); // options should be an object

|

||||

```

|

||||

|

||||

Every generation, each genome will be tested on the `fitnessFunction`. The

|

||||

fitness function should return a score (a number). Through evolution, the

|

||||

genomes will try to _maximize_ the output of the fitness function.

|

||||

|

||||

Negative scores are allowed.

|

||||

|

||||

You can provide the following options in an object for the `options` argument:

|

||||

|

||||

<details>

|

||||

<summary>popsize</summary>

|

||||

Sets the population size of each generation. Default is 50.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>elitism</summary>

|

||||

Sets the <a href="https://www.researchgate.net/post/What_is_meant_by_the_term_Elitism_in_the_Genetic_Algorithm">elitism</a> of every evolution loop. Default is 0.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>provenance</summary>

|

||||

Sets the provenance of the genetic algorithm. Provenance means that during every evolution, the given amount of genomes will be inserted which all have the original

|

||||

network template (which is <code>Network(input,output)</code> when no <code>network</code> option is given). Default is 0.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>mutation</summary>

|

||||

Sets the allowed <a href="https://wagenaartje.github.io/neataptic/docs/methods/mutation/">mutation methods</a> used in the evolutionary process. Must be an array (e.g. <code>[methods.mutation.ADD_NODE, methods.mutation.SUB_NODE]</code>). Default mutation methods are all non-recurrent mutation methods. A random mutation method will be chosen from the array when mutation occrus.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>selection</summary>

|

||||

Sets the allowed <a href="https://wagenaartje.github.io/neataptic/docs/methods/selection/">selection method</a> used in the evolutionary process. Must be a single method (e.g. <code>Selection.FITNESS_PROPORTIONATE</code>). Default is <code>FITNESS_PROPORTIONATE</code>.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>crossover</summary>

|

||||

Sets the allowed crossover methods used in the evolutionary process. Must be an array. <b>disabled as of now</b>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>fitnessPopulation</summary>

|

||||

If set to <code>true</code>, you will have to specify a fitness function that

|

||||

takes an array of genomes as input and sets their <code>.score</code> property.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>mutationRate</summary>

|

||||

Sets the mutation rate. If set to <code>0.3</code>, 30% of the new population will be mutated. Default is <code>0.3</code>.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>mutationAmount</summary>

|

||||

If mutation occurs (<code>randomNumber < mutationRate</code>), sets the amount of times a mutation method will be applied to the network. Default is <code>1</code>.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>network</summary>

|

||||

If you want to start the algorithm from a specific network, specify your network here.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>equal</summary>

|

||||

If set to true, all networks will be viewed equal during crossover. This stimulates more diverse network architectures. Default is <code>false</code>.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>clear</summary>

|

||||

Clears the context of the network before activating the fitness function. Should be applied to get consistent outputs from recurrent networks. Default is <code>false</code>.

|

||||

</details>

|

||||

|

||||

## Properties

|

||||

There are only a few properties

|

||||

|

||||

<details>

|

||||

<summary>input</summary>

|

||||

The amount of input neurons each genome has

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>output</summary>

|

||||

The amount of output neurons each genome has

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>fitness</summary>

|

||||

The fitness function that is used to evaluate genomes

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>generation</summary>

|

||||

Generation counter

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>population</summary>

|

||||

An array containing all the genomes of the current generation

|

||||

</details>

|

||||

|

||||

## Functions

|

||||

There are a few built-in functions. For the client, only `getFittest()` and `evolve()` is important. In the future, there will be a combination of backpropagation and evolution. Stay tuned

|

||||

|

||||

<details>

|

||||

<summary>createPool()</summary>

|

||||

Initialises the first set of genomes. Should not be called manually.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary><i>async</i> evolve()</summary>

|

||||

Loops the generation through a evaluation, selection, crossover and mutation process.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary><i>async</i> evaluate()</summary>

|

||||

Evaluates the entire population by passing on the genome to the fitness function and taking the score.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>sort()</summary>

|

||||

Sorts the entire population by score. Should be called after <code>evaluate()</code>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>getFittest()</summary>

|

||||

Returns the fittest genome (highest score) of the current generation

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>mutate()</summary>

|

||||

Mutates genomes in the population, each genome has <code>mutationRate</code> chance of being mutated.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>getOffspring()</summary>

|

||||

This function selects two genomes from the population with <code>getParent()</code>, and returns the offspring from those parents.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>getAverage()</summary>

|

||||

Returns the average fitness of the current population

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>getParent()</summary>

|

||||

Returns a parent selected using one of the selection methods provided. Should be called after evaluation. Should not be called manually.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>export()</summary>

|

||||

Exports the current population of the set up algorithm to a list containing json objects of the networks. Can be used later with <code>import(json)</code> to reload the population

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>import(json)</summary>

|

||||

Imports population from a json. Must be an array of networks that have converted to json (with <code>myNetwork.toJSON()</code>)

|

||||

</details>

|

||||

10

node_modules/neataptic/mkdocs/templates/docs/architecture/architecture.md

generated

vendored

Normal file

10

node_modules/neataptic/mkdocs/templates/docs/architecture/architecture.md

generated

vendored

Normal file

@@ -0,0 +1,10 @@

|

||||

If you want to built your completely custom neural network; this is the place to be.

|

||||

You can build your network from the bottom, using nodes and connections, or you can

|

||||

ease up the process by using groups and layers.

|

||||

|

||||

* [Connection](connection.md)

|

||||

* [Node](node.md)

|

||||

* [Group](group.md)

|

||||

* [Layer](layer.md)

|

||||

* [Network](network.md)

|

||||

* [Construct](construct.md)

|

||||

30

node_modules/neataptic/mkdocs/templates/docs/architecture/connection.md

generated

vendored

Normal file

30

node_modules/neataptic/mkdocs/templates/docs/architecture/connection.md

generated

vendored

Normal file

@@ -0,0 +1,30 @@

|

||||

description: Documentation of the Connection instance in Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: connection, neural-network, architecture, synapse, weight

|

||||

|

||||

A connection instance defines the connection between two nodes. All you have to do is pass on a from and to node, and optionally a weight.

|

||||

|

||||

```javascript

|

||||

var B = new Node();

|

||||

var C = new Node();

|

||||

var connection = new Connection(A, B, 0.5);

|

||||

```

|

||||

|

||||

Connection properties:

|

||||

|

||||

Property | contains

|

||||

-------- | --------

|

||||

from | connection origin node

|

||||

to | connection destination node

|

||||

weight | the weight of the connection

|

||||

gater | the node gating this connection

|

||||

gain | for gating, gets multiplied with weight

|

||||

|

||||

### Connection methods

|

||||

There are three connection methods:

|

||||

|

||||

* **methods.connection.ALL_TO_ALL** connects all nodes from group `x` to all nodes from group `y`

|

||||

* **methods.connection.ALL_TO_ELSE** connects every node from group `x` to all nodes in the same group except itself

|

||||

* **methods.connection.ONE_TO_ONE** connects every node in group `x` to one node in group `y`

|

||||

|

||||

Every one of these connection methods can also be used on the group itself! (`x.connect(x, METHOD)`)

|

||||

47

node_modules/neataptic/mkdocs/templates/docs/architecture/construct.md

generated

vendored

Normal file

47

node_modules/neataptic/mkdocs/templates/docs/architecture/construct.md

generated

vendored

Normal file

@@ -0,0 +1,47 @@

|

||||

description: Documentation on how to construct your own network with Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: neural-network, architecture, node, build, connection

|

||||

|

||||

|

||||

For example, I want to have a network that looks like a square:

|

||||

|

||||

```javascript

|

||||

var A = new Node();

|

||||

var B = new Node();

|

||||

var C = new Node();

|

||||

var D = new Node();

|

||||

|

||||

// Create connections

|

||||

A.connect(B);

|

||||

A.connect(C);

|

||||

B.connect(D);

|

||||

C.connect(D);

|

||||

|

||||

// Construct a network

|

||||

var network = architect.Construct([A, B, C, D]);

|

||||

```

|

||||

|

||||

And voila, basically a square, but stretched out, right?

|

||||

|

||||

|

||||

|

||||

The `construct()` function looks for nodes that have no input connections, and labels them as an input node. The same for output nodes: it looks for nodes without an output connection (and gating connection), and labels them as an output node!

|

||||

|

||||

**You can also create networks with groups!** This speeds up the creation process and saves lines of code.

|

||||

|

||||

```javascript

|

||||

// Initialise groups of nodes

|

||||

var A = new Group(4);

|

||||

var B = new Group(2);

|

||||

var C = new Group(6);

|

||||

|

||||

// Create connections between the groups

|

||||

A.connect(B);

|

||||

A.connect(C);

|

||||

B.connect(C);

|

||||

|

||||

// Construct a network

|

||||

var network = architect.Construct([A, B, C, D]);

|

||||

```

|

||||

|

||||

Keep in mind that you must always specify your input groups/nodes in **activation order**. Input and output nodes will automatically get sorted out, but all hidden nodes will be activated in the order that they were given.

|

||||

115

node_modules/neataptic/mkdocs/templates/docs/architecture/group.md

generated

vendored

Normal file

115

node_modules/neataptic/mkdocs/templates/docs/architecture/group.md

generated

vendored

Normal file

@@ -0,0 +1,115 @@

|

||||

description: Documentation of the Group instance in Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: group, neurons, nodes, neural-network, activation

|

||||

|

||||

|

||||

A group instance denotes a group of nodes. Beware: once a group has been used to construct a network, the groups will fall apart into individual nodes. They are purely for the creation and development of networks. A group can be created like this:

|

||||

|

||||

```javascript

|

||||

// A group with 5 nodes

|

||||

var A = new Group(5);

|

||||

```

|

||||

|

||||

Group properties:

|

||||

|

||||

Property | contains

|

||||

-------- | --------

|

||||

nodes | an array of all nodes in the group

|

||||

connections | dictionary with connections

|

||||

|

||||

### activate

|

||||

Will activate all the nodes in the network.

|

||||

|

||||

```javascript

|

||||

myGroup.activate();

|

||||

|

||||

// or (array length must be same length as nodes in group)

|

||||

myGroup.activate([1, 0, 1]);

|

||||

```

|

||||

|

||||

### propagate

|

||||

Will backpropagate all nodes in the group, make sure the group receives input from another group or node!

|

||||

|

||||

```javascript

|

||||

myGroup.propagate(rate, momentum, target);

|

||||

```

|

||||

|

||||

The target argument is optional. An example would be:

|

||||

|

||||

```javascript

|

||||

var A = new Group(2);

|

||||

var B = new Group(3);

|

||||

|

||||

A.connect(B);

|

||||

|

||||

A.activate([1,0]); // set the input

|

||||

B.activate(); // get the output

|

||||

|

||||

// Then teach the network with learning rate and wanted output

|

||||

B.propagate(0.3, 0.9, [0,1]);

|

||||

```

|

||||

|

||||

The default value for momentum is `0`. Read more about momentum on the

|

||||

[regularization page](../methods/regularization.md).

|

||||

|

||||

### connect

|

||||

Creates connections between this group and another group or node. There are different connection methods for groups, check them out [here](connection.md).

|

||||

|

||||

```javascript

|

||||

var A = new Group(4);

|

||||

var B = new Group(5);

|

||||

|

||||

A.connect(B, methods.connection.ALL_TO_ALL); // specifying a method is optional

|

||||

```

|

||||

|

||||

### disconnect

|

||||

(not yet implemented)

|

||||

|

||||

### gate

|

||||

Makes the nodes in a group gate an array of connections between two other groups. You have to specify a gating method, which can be found [here](../methods/gating.md).

|

||||

|

||||

```javascript

|

||||

var A = new Group(2);

|

||||

var B = new Group(6);

|

||||

|

||||

var connections = A.connect(B);

|

||||

|

||||

var C = new Group(2);

|

||||

|

||||

// Gate the connections between groups A and B

|

||||

C.gate(connections, methods.gating.INPUT);

|

||||

```

|

||||

|

||||

### set

|

||||

Sets the properties of all nodes in the group to the given values, e.g.:

|

||||

|

||||

```javascript

|

||||

var group = new Group(4);

|

||||

|

||||

// All nodes in 'group' now have a bias of 1

|

||||

group.set({bias: 1});

|

||||

```

|

||||

|

||||

### disconnect

|

||||

Disconnects the group from another group or node. Can be twosided.

|

||||

|

||||

```javascript

|

||||

var A = new Group(4);

|

||||

var B = new Node();

|

||||

|

||||

// Connect them

|

||||

A.connect(B);

|

||||

|

||||

// Disconnect them

|

||||

A.disconnect(B);

|

||||

|

||||

// Twosided connection

|

||||

A.connect(B);

|

||||

B.connect(A);

|

||||

|

||||

// Disconnect from both sides

|

||||

A.disconnect(B, true);

|

||||

```

|

||||

|

||||

### clear

|

||||

Clears the context of the group. Useful for predicting timeseries with LSTM's.

|

||||

77

node_modules/neataptic/mkdocs/templates/docs/architecture/layer.md

generated

vendored

Normal file

77

node_modules/neataptic/mkdocs/templates/docs/architecture/layer.md

generated

vendored

Normal file

@@ -0,0 +1,77 @@

|

||||

description: Documentation of the Layer instance in Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: LSTM, GRU, architecture, neural-network, recurrent

|

||||

|

||||

|

||||

Layers are pre-built architectures that allow you to combine different network

|

||||

architectures into óne network. At this moment, there are 3 layers (more to come soon!):

|

||||

|

||||

```javascript

|

||||

Layer.Dense

|

||||

Layer.LSTM

|

||||

Layer.GRU

|

||||

Layer.Memory

|

||||

```

|

||||

|

||||

Check out the options and details for each layer below.

|

||||

|

||||

### Constructing your own network with layers

|

||||

You should always start your network with a `Dense` layer and always end it with

|

||||

a `Dense` layer. You can connect layers with each other just like you can connect

|

||||

nodes and groups with each other. This is an example of a custom architecture

|

||||

built with layers:

|

||||

|

||||

```javascript

|

||||

var input = new Layer.Dense(1);

|

||||

var hidden1 = new Layer.LSTM(5);

|

||||

var hidden2 = new Layer.GRU(1);

|

||||

var output = new Layer.Dense(1);

|

||||

|

||||

// connect however you want

|

||||

input.connect(hidden1);

|

||||

hidden1.connect(hidden2);

|

||||

hidden2.connect(output);

|

||||

|

||||

var network = architect.Construct([input, hidden1, hidden2, output]);

|

||||

```

|

||||

|

||||

### Layer.Dense

|

||||

The dense layer is a regular layer.

|

||||

|

||||

```javascript

|

||||

var layer = new Layer.Dense(size);

|

||||

```

|

||||

|

||||

### Layer.LSTM

|

||||

The LSTM layer is very useful for detecting and predicting patterns over long

|

||||

time lags. This is a recurrent layer. More info? Check out the [LSTM](../builtins/lstm.md) page.

|

||||

|

||||

```javascript

|

||||

var layer = new Layer.LSTM(size);

|

||||

```

|

||||

|

||||

Be aware that using `Layer.LSTM` is worse than using `architect.LSTM`. See issue [#25](https://github.com/wagenaartje/neataptic/issues/25).

|

||||

|

||||

### Layer.GRU

|

||||

The GRU layer is similar to the LSTM layer, however it has no memory cell and only

|

||||

two gates. It is also a recurrent layer that is excellent for timeseries prediction.

|

||||

More info? Check out the [GRU](../builtins/gru.md) page.

|

||||

|

||||

```javascript

|

||||

var layer = new Layer.GRU(size);

|

||||

```

|

||||

|

||||

### Layer.Memory

|

||||

The Memory layer is very useful if you want your network to remember a number of

|

||||

previous inputs in an absolute way. For example, if you set the `memory` option to

|

||||

3, it will remember the last 3 inputs in the same state as they were inputted.

|

||||

|

||||

```javascript

|

||||

var layer = new Layer.Memory(size, memory);

|

||||

```

|

||||

|

||||

The input layer to the memory layer should always have the same size as the memory size.

|

||||

The memory layer will output a total of `size * memory` values.

|

||||

|

||||

> This page is incomplete. There is no description on the functions you can use

|

||||

on this instance yet. Feel free to add the info (check out src/layer.js)

|

||||

269

node_modules/neataptic/mkdocs/templates/docs/architecture/network.md

generated

vendored

Normal file

269

node_modules/neataptic/mkdocs/templates/docs/architecture/network.md

generated

vendored

Normal file

@@ -0,0 +1,269 @@

|

||||

description: Documentation of the network model in Neataptic

|

||||

authors: Thomas Wagenaar

|

||||

keywords: neural-network, recurrent, layers, neurons, connections, input, output, activation

|

||||

|

||||

Networks are very easy to create. All you have to do is specify an `input` size and an `output` size.

|

||||

|

||||

```javascript

|

||||

// Network with 2 input neurons and 1 output neuron

|

||||

var myNetwork = new Network(2, 1);

|

||||

|

||||

// If you want to create multi-layered networks

|

||||

var myNetwork = new architect.Perceptron(5, 20, 10, 5, 1);

|

||||

```

|

||||

|

||||

If you want to create more advanced networks, check out the 'Networks' tab on the left.

|

||||

|

||||

|

||||

|

||||

### Functions

|

||||

Check out the [train](../important/train.md) and [evolve](../important/evolve.md) functions on their separate pages!

|

||||

|

||||

<details>

|

||||

<summary>activate</summary>

|

||||

Activates the network. It will activate all the nodes in activation order and produce an output.

|

||||

|

||||

<pre>

|

||||

// Create a network

|

||||

var myNetwork = new Network(3, 2);

|

||||

|

||||

myNetwork.activate([0.8, 1, 0.21]); // gives: [0.49, 0.51]

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>noTraceActivate</summary>

|

||||

Activates the network. It will activate all the nodes in activation order and produce an output.

|

||||

Does not calculate traces, so backpropagation is not possible afterwards. That makes

|

||||

it faster than the regular `activate` function.

|

||||

|

||||

<pre>

|

||||

// Create a network

|

||||

var myNetwork = new Network(3, 2);

|

||||

|

||||

myNetwork.noTraceActivate([0.8, 1, 0.21]); // gives: [0.49, 0.51]

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>propagate</summary>

|

||||

This function allows you to teach the network. If you want to do more complex

|

||||

training, use the <code>network.train()</code> function. The arguments for

|

||||

this function are:

|

||||

|

||||

<pre>

|

||||

myNetwork.propagate(rate, momentum, update, target);

|

||||

</pre>

|

||||

|

||||

Where target is optional. The default value of momentum is `0`. Read more about

|

||||

momentum on the [regularization page](../methods/regularization.md). If you run

|

||||

propagation without setting update to true, then the weights won't update. So if

|

||||

you run propagate 3x with `update: false`, and then 1x with `update: true` then

|

||||

the weights will be updated after the last propagation, but the deltaweights of

|

||||

the first 3 propagation will be included too.

|

||||

|

||||

<pre>

|

||||

var myNetwork = new Network(1,1);

|

||||

|

||||

// This trains the network to function as a NOT gate

|

||||

for(var i = 0; i < 1000; i++){

|

||||

network.activate([0]);

|

||||

network.propagate(0.2, 0, true, [1]);

|

||||

|

||||

network.activate([1]);

|

||||

network.propagate(0.3, 0, true, [0]);

|

||||

}

|

||||

</pre>

|

||||

|

||||

The above example teaches the network to output <code>[1]</code> when input <code>[0]</code> is given and the other way around. Main usage:

|

||||

|

||||

<pre>

|

||||

network.activate(input);

|

||||

network.propagate(learning_rate, momentum, update_weights, desired_output);

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>merge</summary>

|

||||

The merge functions takes two networks, the output size of <code>network1</code> should be the same size as the input of <code>network2</code>. Merging will always be one to one to conserve the purpose of the networks. Usage:

|

||||

|

||||

<pre>

|

||||

var XOR = architect.Perceptron(2,4,1); // assume this is a trained XOR

|

||||

var NOT = architect.Perceptron(1,2,1); // assume this is a trained NOT

|

||||

|

||||

// combining these will create an XNOR

|

||||

var XNOR = Network.merge(XOR, NOT);

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>connect</summary>

|

||||

Connects two nodes in the network:

|

||||

|

||||

<pre>

|

||||

myNetwork.connect(myNetwork.nodes[4], myNetwork.nodes[5]);

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>remove</summary>

|

||||

Removes a node from a network, all its connections will be redirected. If it gates a connection, the gate will be removed.

|

||||

|

||||

<pre>

|

||||

myNetwork = new architect.Perceptron(1,4,1);

|

||||

|

||||

// Remove a node

|

||||

myNetwork.remove(myNetwork.nodes[2]);

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>disconnect</summary>

|

||||

Disconnects two nodes in the network:

|

||||

|

||||

<pre>

|

||||

myNetwork.disconnect(myNetwork.nodes[4], myNetwork.nodes[5]);

|

||||

// now node 4 does not have an effect on the output of node 5 anymore

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>gate</summary>

|

||||

Makes a network node gate a connection:

|

||||

|

||||

<pre>

|

||||

myNetwork.gate(myNetwork.nodes[1], myNetwork.connections[5]

|

||||

</pre>

|

||||

|

||||

Now the weight of connection 5 is multiplied with the activation of node 1!

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>ungate</summary>

|

||||

Removes a gate from a connection:

|

||||

|

||||

<pre>

|

||||

myNetwork = new architect.Perceptron(1, 4, 2);

|

||||

|

||||

// Gate a connection

|

||||

myNetwork.gate(myNetwork.nodes[2], myNetwork.connections[5]);

|

||||

|

||||

// Remove the gate from the connection

|

||||

myNetwork.ungate(myNetwork.connections[5]);

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>mutate</summary>

|

||||

Mutates the network. See [mutation methods](../methods/mutation.md).

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>serialize</summary>

|

||||

Serializes the network to 3 <code>Float64Arrays</code>. Used for transferring

|

||||

networks to other threads fast.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>toJSON/fromJSON</summary>

|

||||

Networks can be stored as JSON's and then restored back:

|

||||

|

||||

<pre>

|

||||

var exported = myNetwork.toJSON();

|

||||

var imported = Network.fromJSON(exported);

|

||||

</pre>

|

||||

|

||||

<code>imported</code> will be a new instance of <code>Network</code> that is an exact clone of <code>myNetwork</code>.

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>standalone</summary>

|

||||

Networks can be used in Javascript without the need of the Neataptic library,

|

||||

this function will transform your network into a function accompanied by arrays.

|

||||

|

||||

<pre>

|

||||

var myNetwork = new architect.Perceptron(2,4,1);

|

||||

myNetwork.activate([0,1]); // [0.24775789809]

|

||||

|

||||

// a string

|

||||

var standalone = myNetwork.standalone();

|

||||

|

||||

// turns your network into an 'activate' function

|

||||

eval(standalone);

|

||||

|

||||

// calls the standalone function

|

||||

activate([0,1]);// [0.24775789809]

|

||||

</pre>

|

||||

|

||||

The reason an `eval` is being called is because the standalone can't be a simply

|

||||

a function, it needs some kind of global memory. You can easily copy and paste the

|

||||

result of `standalone` in any JS file and run the `activate` function!

|

||||

|

||||

Note that this is still in development, so for complex networks, it might not be

|

||||

precise.

|

||||

|

||||

</details>

|

||||

|

||||

<details>

|

||||

<summary>crossOver</summary>

|

||||

Creates a new 'baby' network from two parent networks. Networks are not required to have the same size, however input and output size should be the same!

|

||||

|

||||

<pre>

|

||||

// Initialise two parent networks

|

||||

var network1 = new architect.Perceptron(2, 4, 3);

|

||||

var network2 = new architect.Perceptron(2, 4, 5, 3);

|

||||

|

||||

// Produce an offspring

|

||||

var network3 = Network.crossOver(network1, network2);

|

||||

</pre>

|

||||

</details>

|

||||

|

||||

<details>

|

||||