+

+### Neataptic


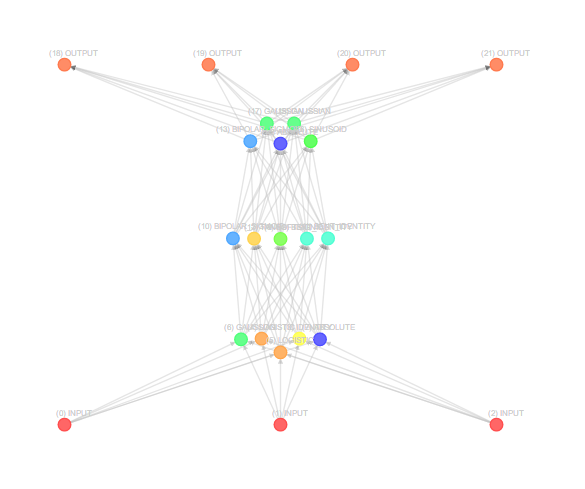

+Neataptic offers flexible neural networks; neurons and synapses can be removed with a single line of code. No fixed architecture is required for neural networks to function at all. This flexibility allows networks to be shaped for your dataset through neuro-evolution, which is done using multiple threads.
+
+```js
+// this network learns the XOR gate (through neuro-evolution)
+var network = new Network(2,1);
+
+var trainingSet = [
+  { input: [0,0], output: [0] },
+  { input: [0,1], output: [1] },
+  { input: [1,0], output: [1] },
+  { input: [1,1], output: [0] }
+];
+
+await network.evolve(trainingSet, {
+  equal: true,
+  error: 0.03
+});
+```
+
+Neataptic also backpropagates more than 5x faster than competitors. [Run the tests yourself](https://jsfiddle.net/tuet004f/11/). This is an example of regular training in Neataptic:
+
+```js
+// this network learns the XOR gate (through backpropagation)
+var network = new architect.Perceptron(2, 4, 1);
+
+// training set same as in above example
+network.train(trainingSet, {
+  error: 0.01
+});
+
+network.activate([1,0]); // 0.9824...
+```
+
+Use any of the 6 built-in networks with customisable sizes to create a network:
+
+```javascript
+var myNetwork = new architect.LSTM(1, 10, 5, 1);
+```
+
+Or build your own network with pre-built layers:
+
+```javascript
+var input = new Layer.Dense(2);
+var hidden1 = new Layer.LSTM(5);
+var hidden2 = new Layer.GRU(3);
+var output = new Layer.Dense(1);
+
+input.connect(hidden1);
+hidden1.connect(hidden2);
+hidden2.connect(output);
+
+var myNetwork = architect.Construct([input, hidden1, hidden2, output]);
+```
+
+You can even build your network neuron-by-neuron using nodes and groups!
+

Visit the wiki to get started

+or play around with neural networks

+

+

+## Examples

+Neural networks can be used for nearly anything: driving a car, playing a game, and even predicting words! At the moment, the website displays only a small number of examples. If you have an interesting project that you want to share with other users of Neataptic, feel free to create a pull request!

+

+Neuroevolution examples (supervised)

+LSTM timeseries (supervised)

+Color classification (supervised)

+Agar.io-AI (unsupervised)

+Target seeking AI (unsupervised)

+Crossover playground

+You made it all the way down! If you appreciate this repo and want to support the development of it, please consider donating :thumbsup:
+[](https://www.paypal.com/cgi-bin/webscr?cmd=_s-xclick&hosted_button_id=CXS3G8NHBYEZE)
diff --git a/node_modules/neataptic/dist/neataptic.js b/node_modules/neataptic/dist/neataptic.js
new file mode 100644
index 0000000..b1eb7de
--- /dev/null
+++ b/node_modules/neataptic/dist/neataptic.js
@@ -0,0 +1,4509 @@
+/*!
+ * The MIT License (MIT)
+ *
+ * Copyright 2017 Thomas Wagenaar

404: Page not found

+If something is wrong, please create an issue here!

+Agar.io AI


Agar.io is quite a simple game to play... well, for humans it is. But is it just as simple for artificial agents? In this article I will explain how I constructed a genetic algorithm that evolves neural networks to play in an Agar.io-like environment. The following simulation shows agents that resulted from 1000+ generations of running the algorithm:

+Hover your mouse over a blob to see some more info! Source code here

+As you might have noticed, the genomes perform quite well, but are far from perfect. The genomes show human-like traits: searching for food, avoiding bigger blobs and chasing smaller blobs. However, sometimes a genome just runs into a bigger blob for no apparent reason. That is because each genome can only see 3 other blobs and 3 food blobs. Above all, the settings of the GA are far from optimized. That is why I invite you to optimize the settings and open a pull request on this repo.

+The making of

+The code consists of 3 main parts: the field, the player and the genetic algorithm. In the following few paragraphs I'll go into depth on these topics and discuss the choices I made. At the bottom of this article you will find a list of improvements I have thought of, but not made yet.

+If you have any questions about the code in the linked repo, please create an issue on this repo.

+The field

+The field consists of 2 different objects: food and players. Food is stationary, and has no 'brain'. Every piece of food has a static feeding value. Once food has been eaten, it just moves to a new location on the field. Players on the other hand are capable of making decisions through neural networks. They slowly decay in size when not replenished (either by eating other players or food).

+The field has no borders; when a blob hits the left wall, it will 'teleport' to the right wall. During tests with a bordered field, the entire population of genomes tended to stick to one of the walls without ever evolving into a more flexible population. However, a borderless field introduces a problem that has not yet been fixed: a genome near the left wall, for example, won't detect blobs that are close to the right wall, even though the distance between the blobs across the wrap-around can be very small.
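One possible way to address the detection problem described above is to measure distances across the wrap-around. The following is a minimal sketch, not code from the linked repo; the helper names `wrappedDelta` and `wrappedDistance` and the field dimensions are hypothetical.

```javascript
// Hypothetical helpers (not part of the original source):
// shortest distance between two points on a field whose edges wrap around.

// Shortest separation along one axis of length `size`.
function wrappedDelta(a, b, size) {
  var d = Math.abs(a - b) % size;
  // Either go directly, or go the other way around through the wall.
  return Math.min(d, size - d);
}

// Euclidean distance using the wrapped separation on both axes.
function wrappedDistance(p, q, width, height) {
  var dx = wrappedDelta(p.x, q.x, width);
  var dy = wrappedDelta(p.y, q.y, height);
  return Math.sqrt(dx * dx + dy * dy);
}

// A blob near the left wall and a blob near the right wall of an
// 800 x 600 field (assumed dimensions) are close once wrapping is considered.
var nearLeft = { x: 5, y: 100 };
var nearRight = { x: 795, y: 100 };
var wrapped = wrappedDistance(nearLeft, nearRight, 800, 600);
```

With a detection radius of 150 pixels, these two blobs would see each other under this measure, whereas the plain Euclidean distance (790 pixels) would keep them invisible to one another.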

+Some (configurable) settings:

+- There is one food blob per ~2500 pixels
+- There is one player per ~12500 pixels

The player

+The player is a simplified version of the player in the real game. A genome can't split or shoot; it can only move. The output of each genome's brain consists of merely a movement direction and a movement speed.

+Genomes can't accelerate; they immediately adopt the speed given by their brain. They can only eat other blobs when they are at least 10% bigger, and they can move freely through other blobs that are less than 10% bigger. Each genome will only see the 3 closest players and the 3 closest food blobs within a certain radius.

+Some (configurable) settings:

+- A player must be 10% bigger than a blob to eat it
+- The minimal area of a player is 400 pixels
+- The maximal area of a player is 10000 pixels
+- The detection radius is 150 pixels
+- A player can see up to 3 other players in its detection radius
+- A player can see up to 3 food blobs in its detection radius
+- The maximum speed of a player is 3px/frame
+- The minimal speed of a player is 0.6px/frame
+- Every frame, the player loses 0.2% of its mass
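The eating rule and the per-frame decay from the settings above can be sketched as follows. This is an illustration only: the constant and function names are hypothetical, and it assumes that "10% bigger" and "mass" both refer to a player's area.

```javascript
// Hypothetical constants mirroring the settings listed above.
var RELATIVE_SIZE = 1.1; // a player must be 10% bigger to eat another blob
var MIN_AREA = 400;      // minimal area of a player
var MAX_AREA = 10000;    // maximal area of a player
var DECAY = 0.998;       // every frame the player keeps 99.8% of its area

// Assumption: size is compared by area.
function canEat(eaterArea, otherArea) {
  return eaterArea > otherArea * RELATIVE_SIZE;
}

// Shrink by 0.2% per frame, but never below the minimal area.
function decay(area) {
  return Math.max(MIN_AREA, area * DECAY);
}

// Grow after eating, but never above the maximal area.
function grow(area, eatenArea) {
  return Math.min(MAX_AREA, area + eatenArea);
}
```

Clamping in `decay` and `grow` keeps every player between the minimal and maximal area, so a starving player stops shrinking at 400 pixels and a dominant one stops growing at 10000.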

The genetic algorithm

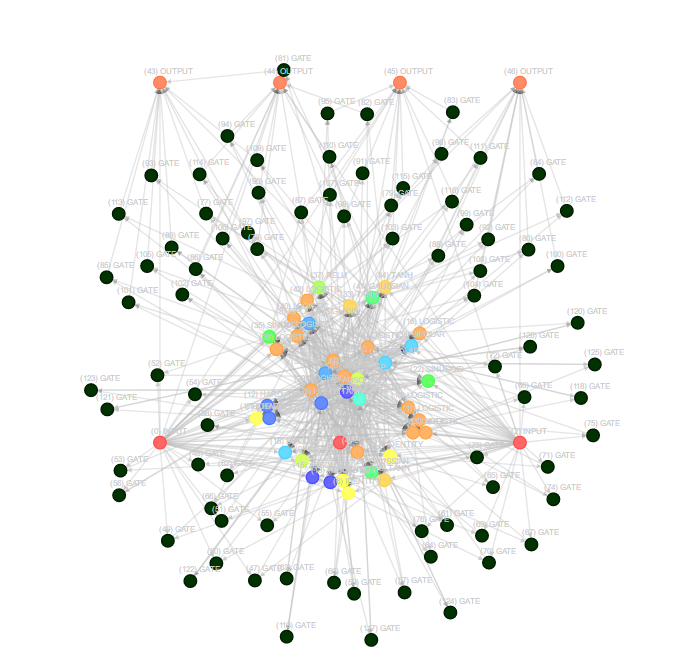

+The genetic algorithm is the core of the AI. In the first frame, a certain number of players are initialized, each with a neural network as its brain. The brains represent the population of a generation. These brains are then evolved by putting the entire population in a single playing field and letting them compete against each other. The fittest brains move on to the next generation; the less fit brains have a high chance of being removed.

+neat.sort();

+var newPopulation = [];

+

+// Elitism

+for(var i = 0; i < neat.elitism; i++){

+ newPopulation.push(neat.population[i]);

+}

+

+// Breed the next individuals

+for(var i = 0; i < neat.popsize - neat.elitism; i++){

+ newPopulation.push(neat.getOffspring());

+}

+

+// Replace the old population with the new population

+neat.population = newPopulation;

+neat.mutate();

+

+neat.generation++;

+startEvaluation();

+The code above runs when the evaluation is finished. It is very similar to the built-in evolve() function of Neataptic, but it is adapted to work without a fitness function, because all genomes must be evaluated at the same time.

+The scoring of the genomes is quite easy: when a certain number of iterations has been reached, each genome is ranked by its area. Better performing genomes have eaten more blobs, and thus have a bigger area. This scoring is identical to the scoring in Agar.io. I have experimented with other scoring systems, but many of them encouraged small players to finish themselves off if their score was too low for a certain amount of time.

+Some (configurable) settings:

+- An evaluation runs for 1000 frames
+- The mutation rate is 0.3
+- The elitism is 10%
+- Each genome starts with 0 hidden nodes
+- All mutation methods are allowed
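The area-based ranking and the 10% elitism described above can be sketched without Neataptic. The helper names and the population size of 50 are assumptions made for illustration; in the simulation itself, `neat.sort()` and `neat.elitism` play these roles.

```javascript
// Hypothetical helper: rank players from largest to smallest area.
// Returns a new array and leaves the input untouched.
function rankByArea(players) {
  return players.slice().sort(function (a, b) {
    return b.area - a.area;
  });
}

// Hypothetical helper: turn an elitism percentage into a number of genomes.
function eliteCount(popsize, elitismPercent) {
  return Math.round(popsize * elitismPercent);
}

// Example with three players; the id field is only there for readability.
var ranked = rankByArea([
  { id: 'a', area: 500 },
  { id: 'b', area: 900 },
  { id: 'c', area: 400 }
]);

// With an assumed population of 50 and 10% elitism, 5 genomes survive unchanged.
var elites = eliteCount(50, 0.1);
```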

Issues/future improvements

+There are a couple of known issues. However, most of them are linked to a future improvement in some way or another.

+Issues:

+- Genomes tend to avoid hidden nodes (this is really bad)

Future improvements:

+- Players must be able to detect close players, even if they are on the other side of the field
+- Players/food should not be spawned at locations occupied by players
+- The genetic algorithm should be able to run without any visualization
+- ... tell me your idea!

Classify colors


Classifying is something a neural network can do quite well. In this article I will demonstrate how you can set up the evolution process of a neural network that learns to classify colors with Neataptic.

+[Interactive demo: color selection controls, an iteration counter, a best-fitness readout, and two panels showing the set sorted by color and the set sorted by the neural network]

How it works

+The algorithm behind this classification is actually pretty easy. One of my biggest problems was generating the colors. However, I stumbled upon this JavaScript library that allows you to generate colors randomly by name, which is exactly what I needed (but it also created a problem, read below). So I used it to create a training set:

+function createSet(){
+  var set = [];
+
+  for(var index = 0; index < COLORS.length; index++){
+    var color = COLORS[index];
+
+    // Generate PER_COLOR random shades of this hue, e.g. 'rgb(12, 34, 56)'
+    var randomColors = randomColor({ hue: color, count: PER_COLOR, format: 'rgb' });
+
+    for(var i = 0; i < randomColors.length; i++){
+      var rgb = randomColors[i];
+
+      // Strip 'rgb(' and ')', then normalize each channel to the range 0..1
+      var input = rgb.substring(4, rgb.length - 1).replace(/ /g, '').split(',');
+      for(var y = 0; y < input.length; y++) input[y] = input[y] / 255;
+
+      // One-hot encode the color class
+      var output = Array.apply(null, Array(COLORS.length)).map(Number.prototype.valueOf, 0);
+      output[index] = 1;
+
+      set.push({ input: input, output: output, color: color, rgb: rgb });
+    }
+  }
+
+  return set;
+}

+COLORS is an array storing all color names as strings. The possible colors are listed above. For each generated color, we convert the rgb string to an array and normalize the values to between 0 and 1. Finally, we encode the color label using one-hot encoding.
+Please note that the color and rgb object attributes are irrelevant for the algorithm.
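To make the two conversions concrete, here is a small standalone sketch of the rgb-string normalization and the one-hot encoding. The helper names `parseRgb` and `oneHot` are hypothetical and are not part of the article's code or of Neataptic.

```javascript
// Hypothetical helper: turn 'rgb(r, g, b)' into three values between 0 and 1.
function parseRgb(rgb) {
  return rgb
    .substring(4, rgb.length - 1) // drop the leading 'rgb(' and trailing ')'
    .split(',')
    .map(function (channel) {
      // Number() ignores the surrounding spaces in ' 51'
      return Number(channel) / 255;
    });
}

// Hypothetical helper: a vector of zeros with a single 1 at `index`.
function oneHot(index, length) {
  var vector = new Array(length).fill(0);
  vector[index] = 1;
  return vector;
}

var input = parseRgb('rgb(255, 0, 51)'); // three normalized channels
var output = oneHot(2, 4);               // class 2 out of 4 color classes
```

Each training sample then pairs one normalized input vector with the one-hot vector of its color class.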

network.evolve(set, {

+ iterations: 1,

+ mutationRate: 0.6,

+ elitism: 5,

+ popsize: 100,

+ mutation: methods.mutation.FFW,

+ cost: methods.cost.MSE

+});

+Now we run the built-in genetic algorithm of neataptic.js. We allow all mutation methods suited to feedforward networks (methods.mutation.FFW) and set the mutation rate higher than normal. Sprinkle in some elitism and double the default population size. Experiment with the parameters yourself; maybe you'll find even better ones!

+The fitness function is the most vital part of the algorithm. It basically calculates the Mean Squared Error of the entire set. Neataptic handles this fitness calculation for you, so you don't have to program it yourself. At the same time the default growth parameter is used, so the networks will get penalized for being too large.
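As a reminder of what the Mean Squared Error measures, here is a minimal standalone sketch. It is not Neataptic's internal implementation; `mse` and `setError` are hypothetical names, and `activate` stands in for any function that maps an input array to an output array.

```javascript
// Mean squared error between one target vector and one output vector.
function mse(target, output) {
  var sum = 0;
  for (var i = 0; i < target.length; i++) {
    sum += Math.pow(target[i] - output[i], 2);
  }
  return sum / target.length;
}

// Average MSE over a whole set of { input, output } samples.
// `activate` is a placeholder for the network's forward pass.
function setError(set, activate) {
  var total = 0;
  for (var i = 0; i < set.length; i++) {
    total += mse(set[i].output, activate(set[i].input));
  }
  return total / set.length;
}

// Example: a dummy "network" that always answers 0.5 for both classes.
var dummy = function () { return [0.5, 0.5]; };
var error = setError([
  { input: [1, 0, 0], output: [1, 0] },
  { input: [0, 0, 1], output: [0, 1] }
], dummy);
```

A lower error means a fitter genome; the size penalty from the growth parameter mentioned above is applied on top of this error and is not modeled here.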

And putting together all this code will create a color classifier.

+Articles


Welcome to the articles page! Every now and then, articles will be posted here showing what kinds of projects Neataptic can be used for. For example, Neataptic is excellent for developing AI for browser games.

+If you want to post your own article here, feel free to create a pull request or an issue on the repo page!

+Neuroevolution


This page shows some neuro-evolution examples. Please note that not every example may always be successful. More may be added in the future!

+This neural network gets taught to increase the input by 0.2 until 1.0 is reached, then it must decrease the input by 2.0.

+This neural network gets taught to wait for 9 inputs of 0 and then to output 1 at input number 10.

+This neural network gets taught to classify whether a letter of the alphabet is a vowel or not. The data is one-hot-encoded.

+Playground

+[Interactive playground widget; its output panel is not reproduced here]

Target-seeking AI


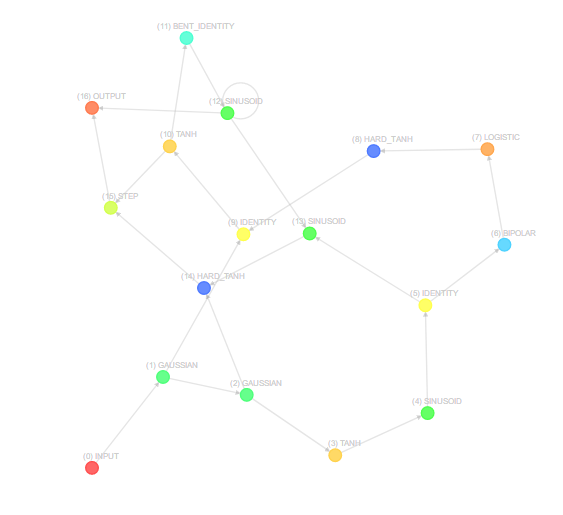

In the simulation below, neural networks that have been evolved through roughly 100 generations try to seek a target. Their goal is to stay as close to the target as possible at all times. If you want to see what one of these networks looks like, check out the complete simulation.

+Click on the field to relocate the target! Source code here.

+The neural agents are actually performing really well. At least one agent will have 'solved the problem' after roughly 20 generations. That is because the base of the solution is quite easy: one of the inputs of the neural networks is the angle to the target, so all it has to do is output some value that is similar to this input value. This can easily be done through the identity activation function, but surprisingly, most agents in the simulation above tend to avoid this function.

+You can check out the topology of the networks here. If you manage to evolve the genomes quicker or better than this simulation with different settings, please open a pull request on this repo.

+The making of

+In the previous article I went into more depth on the environment of the algorithm, but in this article I will focus more on the settings and the inputs/outputs of the algorithm itself.

+If you have any questions about the code in the linked repo, please create an issue on this repo.

+The agents

+The agents' task is very simple. They have to get within the vicinity of the target, which is set to about 100 pixels. Once an agent is in that vicinity, its score is increased in proportion to how close it is to the target (`100 - dist`). There is one catch, though: for every node in the agent's network, the agent's score is decreased. There are two reasons for this: 1. networks shouldn't overfit the solution and 2. smaller networks require less computation.
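The scoring rule just described can be sketched in a few lines. The function names are hypothetical, and `SCORE_RADIUS = 100` is an assumption based on the "about 100 pixels" mentioned above; the per-node penalty follows the `genome.nodes.length * SCORE_RADIUS / 10` expression used in the evaluation code further down.

```javascript
// Assumed value, based on the vicinity of about 100 pixels.
var SCORE_RADIUS = 100;

// Points gained in one frame: more when closer, nothing outside the radius.
function proximityScore(dist) {
  return dist < SCORE_RADIUS ? SCORE_RADIUS - dist : 0;
}

// Points deducted at the end of an evaluation for the size of the network.
function sizePenalty(nodeCount) {
  return nodeCount * SCORE_RADIUS / 10;
}

// Example: an agent that stays 30 pixels from the target for 3 frames,
// with a network of 7 nodes.
var score = 3 * proximityScore(30) - sizePenalty(7);
```

Under these assumptions every extra node costs as much as one frame spent 90 pixels from the target, which is what nudges evolution towards small networks.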

+Agents have some kind of momentum. They don't have mass, but they do have acceleration, so it takes a small amount of time for an agent to reach top speed in a certain direction.

+Each agent has the following inputs:

+- Its own speed in the x-axis
+- Its own speed in the y-axis
+- The target's speed in the x-axis
+- The target's speed in the y-axis
+- The angle towards the target
+- The distance to the target
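The six inputs listed above could be assembled along these lines. This is a sketch under assumptions: the property names (`x`, `y`, `vx`, `vy`) and the function `targetInputs` are hypothetical, and the actual simulation may scale or normalize these values before feeding them to the network.

```javascript
// Hypothetical helper: build the input vector for one agent.
// Both `agent` and `target` are assumed to carry a position (x, y)
// and a velocity (vx, vy).
function targetInputs(agent, target) {
  var dx = target.x - agent.x;
  var dy = target.y - agent.y;

  return [
    agent.vx,                     // own speed in the x-axis
    agent.vy,                     // own speed in the y-axis
    target.vx,                    // target's speed in the x-axis
    target.vy,                    // target's speed in the y-axis
    Math.atan2(dy, dx),           // angle towards the target, in radians
    Math.sqrt(dx * dx + dy * dy)  // distance to the target, in pixels
  ];
}

var inputs = targetInputs(
  { x: 0, y: 0, vx: 1, vy: 0 },
  { x: 3, y: 4, vx: 0, vy: 0.5 }
);
```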

The output of each agent is just the desired movement direction.

+There is no kind of collision, except for the walls of the fields. In the future, it might be interesting to add collisions between multiple agents and/or the target to reveal some new tactics. This would require the agent to know the location of surrounding agents.

+The target

+The target is fairly simple. It's programmed to switch direction every now and then by a random amount. There is one important thing, however: the target moves at half the speed of the agents. This makes sure that agents always have the ability to catch up with the target. Apart from that, the physics for the target are similar to the agents' physics.

+The genetic algorithm

+The genetic algorithm is the core of the AI. In the first frame, a certain number of players are initialized, each with a neural network as its brain. The brains represent the population of a generation. These brains are then evolved by putting the entire population in one playing field and letting them compete against each other. The fittest brains move on to the next generation; the less fit brains have a high chance of being removed.

+// Networks shouldn't get too big

+for(var genome in neat.population){

+ genome = neat.population[genome];

+ genome.score -= genome.nodes.length * SCORE_RADIUS / 10;

+}

+

+// Sort the population by score

+neat.sort();

+

+// Draw the best genome

+drawGraph(neat.population[0].graph($('.best').width(), $('.best').height()), '.best', false);

+

+// Init new pop

+var newPopulation = [];

+

+// Elitism

+for(var i = 0; i < neat.elitism; i++){

+ newPopulation.push(neat.population[i]);

+}

+

+// Breed the next individuals

+for(var i = 0; i < neat.popsize - neat.elitism; i++){

+ newPopulation.push(neat.getOffspring());

+}

+

+// Replace the old population with the new population

+neat.population = newPopulation;

+neat.mutate();

+

+neat.generation++;

+startEvaluation();

+The code above runs when the evaluation is finished. It is very similar to the built-in evolve() function of Neataptic, but it is adapted to work without a fitness function, because all genomes must be evaluated at the same time.

The scoring of the genomes is quite easy: when a certain number of iterations has been reached, each genome is ranked by its final score. Genomes with a higher score have a small number of nodes and have been close to the target throughout the iteration.

+Some (configurable) settings:

+- An evaluation runs for 250 frames
+- The mutation rate is 0.3
+- The elitism is 10%
+- Each genome starts with 0 hidden nodes
+- All mutation methods are allowed

Issues/future improvements

+- ... none yet! Tell me your ideas!

Forks

+- corpr8's fork gives each neural agent its own acceleration, as well as letting each arrow remain in the same place after each generation. This creates a much more 'fluid' process.